As software engineers gain seniority, we usually find ourselves shifting from writing code to reviewing it. With broader impact and scope comes the responsibility to review more pull requests across different projects and teams. The question becomes: how do we make the best use of our limited review time? Which PRs deserve our attention first?

I’ve been skeptical about AI tools for writing business code. After trying numerous products and tools, I found them to be mostly a waste of time when it comes to actual development work. The promises rarely match the reality, especially for complex, domain-specific code like the Datadog Java tracer, where no other open source project exists that the AI could draw inspiration from.

But here’s where it gets interesting: AI is great at understanding concepts and somewhat good at reasoning about priorities. So instead of trying to make AI write code, I decided to use it for something it’s actually good at: helping me choose which pull requests need my attention most.

The Challenge: Too Many PRs, Too Little Time

When working on a large codebase like Datadog’s dd-trace-java library, the volume of pull requests can be overwhelming. Not all PRs are created equal: some touch critical core functionality, others introduce breaking API changes, while some are simple documentation updates. The challenge is quickly identifying which ones require senior review expertise.

Traditional approaches rely on GitHub labels, assignees, or simple heuristics. But these often miss nuanced factors like:

- Impact on core tracing functionality – As the codebase has historically been tightly coupled around the Tracing product, it can be tricky to assess whether a change to another product will impact Tracing and therefore requires extra care

- Backward compatibility implications – One of our main development rules is “don’t hurt customers”, and the Java ecosystem is so vast that you can’t assume how the library will be used. In practice, this means any behavior change must be gated behind a feature flag

- Performance and security considerations – Our features should not come at the cost of poor performance. They are designed to help customers get better performance, hence our attention to memory allocation and the algorithms we use

- API surface area changes – Making sure changes move toward a better architecture, and that we did not miss a design constraint behind the contributor’s needs
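To make these factors usable by a model, they can be spelled out as an explicit checklist in the prompt. The Java sketch below is hypothetical: `ReviewCriterion`, `PromptBuilder.buildPrompt` and the wording of each question illustrate the idea and are not the assistant’s actual prompt.

```java
import java.util.Arrays;
import java.util.stream.Collectors;

/** Hypothetical encoding of the review criteria as prompt text (not the real tool's prompt). */
enum ReviewCriterion {
    CORE_TRACING("Impact on core tracing functionality",
        "Does the change touch code that the tracing product depends on?"),
    BACKWARD_COMPATIBILITY("Backward compatibility",
        "Does it change existing behavior, and if so, is the change gated behind a feature flag?"),
    PERFORMANCE_SECURITY("Performance and security",
        "Does it add allocations on hot paths, costly algorithms or security risks?"),
    API_SURFACE("API surface area",
        "Does it add, remove or change public API, and does that fit the target architecture?");

    final String title;
    final String question;

    ReviewCriterion(String title, String question) {
        this.title = title;
        this.question = question;
    }
}

final class PromptBuilder {
    private PromptBuilder() {}

    /** Builds a prompt asking the model to assess one PR against every criterion, with reasoning. */
    static String buildPrompt(String prTitle, String prDescription, String diff) {
        // One "- title: question" line per criterion, in declaration order.
        String checklist = Arrays.stream(ReviewCriterion.values())
            .map(c -> "- " + c.title + ": " + c.question)
            .collect(Collectors.joining("\n"));
        return """
            Assess this pull request for review priority.
            For each criterion, answer briefly and explain your reasoning:
            %s

            Title: %s
            Description: %s
            Diff:
            %s
            """.formatted(checklist, prTitle, prDescription, diff);
    }
}
```

Keeping the criteria in one enum means the checklist the model sees and the factors the team agreed on cannot silently drift apart.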

Enter the AI Code Review Assistant

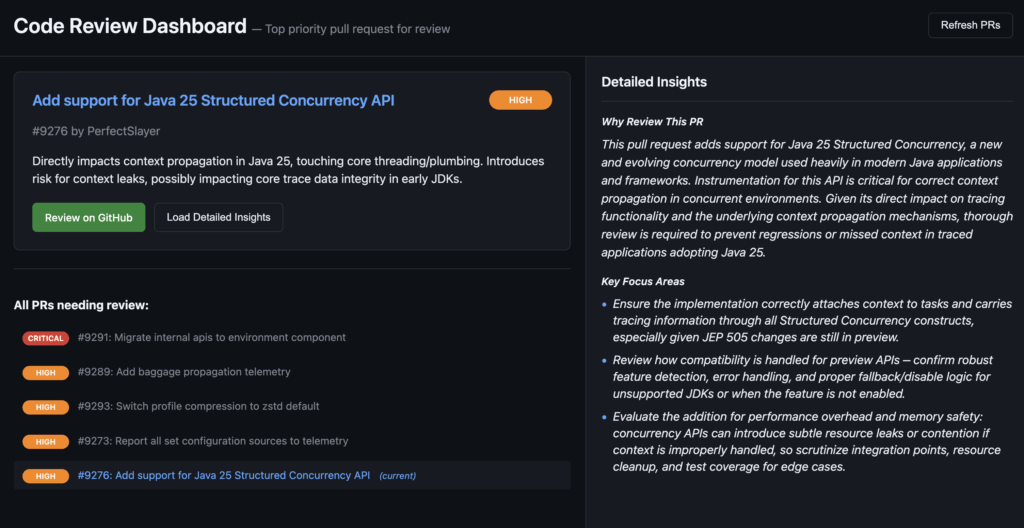

Instead of fighting AI’s limitations, I decided to work with its strengths. I built a web application that uses AI to analyze and prioritize pull requests, giving me a more tactical way to spend my code review time.

The system works by:

- Connecting to GitHub via MCP (Model Context Protocol) to fetch recent PR data

- Analyzing each PR with LangChain4j and OpenAI models against predefined criteria

- Ranking PRs by impact, risk, and urgency

- Presenting the results in a clean web interface built with Quarkus and Qute templates for easy consumption
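The ranking step can be pictured as a weighted score over the model’s per-PR assessment. This is a minimal sketch under stated assumptions: the `PrAssessment` record, the 1 to 5 score scale and the weights are hypothetical, and the model is assumed to have already returned impact, risk and urgency scores plus its reasoning (parsing that output is left out).

```java
import java.util.Comparator;
import java.util.List;

/** Hypothetical per-PR assessment, e.g. parsed from the model's answer (scores from 1 to 5). */
record PrAssessment(int number, String title, int impact, int risk, int urgency, String reasoning) {

    // Illustrative weights (assumption): impact and risk count more than urgency.
    static final double IMPACT_WEIGHT = 0.4;
    static final double RISK_WEIGHT = 0.4;
    static final double URGENCY_WEIGHT = 0.2;

    /** Weighted priority score; higher means "review sooner". */
    double priority() {
        return IMPACT_WEIGHT * impact + RISK_WEIGHT * risk + URGENCY_WEIGHT * urgency;
    }
}

final class PrPrioritizer {
    private PrPrioritizer() {}

    /** Sorts assessments from highest to lowest priority; ties are broken by ascending PR number. */
    static List<PrAssessment> rank(List<PrAssessment> assessments) {
        return assessments.stream()
            .sorted(Comparator.comparingDouble(PrAssessment::priority).reversed()
                .thenComparingInt(PrAssessment::number))
            .toList();
    }
}
```

With these weights, a core tracing change scored (5, 4, 3) gets 4.2 and ranks above a README typo fix scored (1, 1, 1) at 1.0. Keeping the `reasoning` field next to the score is what lets the interface show why a PR landed where it did, so the ranking can be checked rather than taken on faith.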

Finding AI’s Sweet Spot

The application has changed how I approach code reviews. Instead of going through PRs chronologically or relying on simple heuristics, I now have a tool that helps me focus on what matters most. The system considers factors I might overlook at a quick glance and provides consistent prioritization across different types of changes.

This project reinforced my belief that AI works best when:

- The problem has clear criteria – Code review prioritization has well-defined factors that can be consistently applied

- Context understanding matters more than code generation – AI excels at parsing PR metadata, titles, descriptions, and change diffs to identify patterns

- Human judgment remains crucial – AI suggests priorities, but I make the final review decisions based on domain expertise

- Transparency is key – Seeing the reasoning lets me verify that the AI’s suggestions are correct, and helps me learn from its analysis

Most importantly, it respects my time. By spending a few seconds understanding the AI’s reasoning, I can make better decisions about where to invest my 60-90 minutes of daily review time. The tool doesn’t replace my judgment; it augments it with insights and analysis that used to consume review time and energy.

Conclusion

AI isn’t ready to replace developers, but it can be a powerful tool when used thoughtfully. By focusing on AI’s strengths like pattern recognition, context understanding, and reasoning, rather than trying to make it write code, we can build tools that genuinely improve our daily workflows.

The code review assistant shows that the most valuable AI applications might not be the ones we are being sold, like code generation, but the ones that help us make better decisions about the code we already have.